Title: Maximizing File System Performance: Unleashing the True Potential of Your Storage

Introduction:

In today’s data-driven world, where businesses rely heavily on digital assets, file system performance determines how quickly and reliably information can be stored and retrieved. A well-optimized file system makes the applications built on top of it more responsive and helps prevent slowdowns that cost productivity. This article explores the key factors that influence file system performance and provides practical tips for getting the most out of your storage.

Understanding File System Performance:

File system performance refers to how quickly and efficiently a storage system can handle read and write operations. It encompasses various aspects such as data access speed, throughput, latency, scalability, and responsiveness. Achieving optimal performance requires a holistic approach that considers hardware capabilities, software configuration, workload patterns, and maintenance practices.

Factors Influencing File System Performance:

Hardware Infrastructure:

The underlying hardware infrastructure plays a vital role in determining file system performance. Factors such as disk type (HDD or SSD), disk speed, RAID configurations, network bandwidth, and memory capacity directly impact data access speeds. Investing in high-performance hardware components can significantly enhance file system performance.

File System Design:

Choosing the right file system for your specific use case is crucial. Different file systems have varying strengths and weaknesses when it comes to handling large files, small files, random access patterns, or sequential workloads. Understanding your workload requirements will help you select an appropriate file system that maximizes performance.

Disk Partitioning:

Where you have multiple physical disks, placing data on separate devices spreads the I/O load and reduces contention, for example by keeping frequently accessed files apart from archival data. Partitions on the same physical disk still share that disk’s I/O capacity, so splitting a single disk into partitions helps mainly with organization and isolation rather than raw speed.

File System Tuning:

Fine-tuning various parameters within the chosen file system can yield significant improvements in performance. Parameters such as block size, buffer cache size, read-ahead settings, and journaling options can be adjusted to match the workload characteristics and optimize performance.
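The effect of block size can be estimated with simple arithmetic. The sketch below assumes space is allocated in whole blocks and ignores metadata, inline data, and tail packing, which some real file systems use; the file sizes are hypothetical. It computes slack, the allocated-but-unused space each candidate block size would leave behind:

```python
def allocated_bytes(file_size, block_size):
    """Bytes a file occupies when space is handed out in whole blocks
    (simplified model: ignores metadata, inline data, and tail packing)."""
    return (file_size + block_size - 1) // block_size * block_size


def slack_bytes(file_sizes, block_size):
    """Allocated-but-unused bytes across a set of files."""
    return sum(allocated_bytes(size, block_size) - size for size in file_sizes)


# Hypothetical workload: 1,000 files of 100 bytes plus one 10 MiB file.
sizes = [100] * 1000 + [10 * 1024 * 1024]
for block_size in (1024, 4096, 65536):
    print(block_size, slack_bytes(sizes, block_size))
# 1024 924000
# 4096 3996000
# 65536 65436000
```

Slack is only one side of the trade-off: larger blocks can mean fewer allocation and metadata operations for big sequential files, which this simple model does not capture.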

Regular Maintenance:

Routine maintenance helps sustain performance: defragmenting hard disk drives (solid-state drives do not benefit from traditional defragmentation), running file system checks to detect and repair corruption, and deduplicating data to reclaim space. Regularly monitoring disk health, identifying potential bottlenecks, and addressing them promptly prevents file system performance from degrading over time.

Maximizing File System Performance:

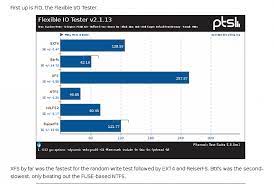

Benchmarking and Monitoring:

Regularly benchmark your file system against a known baseline, such as measurements taken when the system was healthy or published figures for comparable hardware, and monitor key metrics like IOPS (Input/Output Operations Per Second), throughput, latency, and response times to identify areas for improvement. Use dedicated benchmarking tools, such as fio, to obtain accurate and repeatable measurements.
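As a rough illustration of what such a benchmark measures, the sketch below times sequential writes, each followed by fsync, and reports throughput plus median and p99 latency. It is single-threaded with one outstanding request at a time, so it is not a substitute for a dedicated tool such as fio; the function name, scratch file name, and default sizes are hypothetical choices.

```python
import os
import statistics
import tempfile
import time


def sequential_write_benchmark(directory, block_size=1 << 20, blocks=16):
    """Write `blocks` chunks of `block_size` bytes, fsync after each one, and
    report throughput (MB/s) plus median and p99 per-write latency (ms)."""
    payload = os.urandom(block_size)  # random bytes so compression cannot inflate results
    latencies = []
    path = os.path.join(directory, "bench.tmp")  # hypothetical scratch file name
    start = time.perf_counter()
    try:
        with open(path, "wb") as f:
            for _ in range(blocks):
                t0 = time.perf_counter()
                f.write(payload)
                f.flush()             # hand Python's buffer to the OS
                os.fsync(f.fileno())  # wait until the OS reports the data as durable
                latencies.append((time.perf_counter() - t0) * 1000)
        elapsed = time.perf_counter() - start
    finally:
        if os.path.exists(path):
            os.remove(path)
    latencies.sort()
    return {
        "throughput_mb_s": block_size * blocks / elapsed / 1e6,
        "median_latency_ms": statistics.median(latencies),
        # nearest-rank approximation; only meaningful with many samples
        "p99_latency_ms": latencies[int(0.99 * (len(latencies) - 1))],
    }


# /tmp may be RAM-backed (tmpfs); pass a directory on the file system under test.
with tempfile.TemporaryDirectory() as scratch:
    print(sequential_write_benchmark(scratch))
```

Record the results from a healthy system and rerun the same script later; a drop in throughput or a rise in p99 latency is a signal worth investigating.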

Workload Analysis:

Understanding the specific workload patterns your file system will encounter is essential for optimizing performance. Analyze read-to-write ratios, file sizes, access patterns (random or sequential), and peak usage times to fine-tune your file system configuration accordingly.
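File size distribution is one workload characteristic that is easy to gather. This sketch, assuming read access to the directory tree, buckets regular files by power-of-two size so you can see whether a workload is dominated by small or large files; the function names are illustrative.

```python
import os
import stat
from collections import Counter


def size_histogram(root):
    """Count regular files under `root` by power-of-two size bucket.
    A key of 2**k means the size is below 2**k bytes but at least 2**(k-1);
    key 1 holds empty files."""
    hist = Counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                st = os.lstat(os.path.join(dirpath, name))  # do not follow symlinks
            except OSError:
                continue  # file vanished or cannot be read; skip it
            if stat.S_ISREG(st.st_mode):
                hist[1 << st.st_size.bit_length()] += 1
    return hist


def print_histogram(hist):
    """Print one line per bucket: upper bound, file count, share of files."""
    total = sum(hist.values()) or 1
    for upper in sorted(hist):
        print(f"< {upper:>12,} B  {hist[upper]:>8}  {100 * hist[upper] / total:5.1f}%")
```

For example, `print_histogram(size_histogram("/srv/data"))` would summarize a tree, where `/srv/data` is a placeholder path.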

Caching Strategies:

Effective caching at both the operating system level (e.g., the page cache) and the application level (e.g., in-memory caches) can significantly reduce the number of disk accesses and improve overall performance. Serving frequently accessed data from memory avoids slow physical disk operations altogether.
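At the application level, Python's standard library already provides a simple in-memory cache. This sketch wraps file reads in `functools.lru_cache` and includes the file's modification time in the cache key so that edited files are re-read. The helper names are hypothetical, and mtime-based invalidation can miss changes made within the timestamp granularity of the file system.

```python
import functools
import os


@functools.lru_cache(maxsize=256)  # maxsize limits the number of entries, not bytes
def read_cached(path, mtime_ns):
    # mtime_ns is part of the cache key, so a modified file gets a new entry;
    # stale entries stay until evicted by newer ones
    with open(path, "rb") as f:
        return f.read()


def read_file(path):
    """Return file contents, served from memory while the file is unchanged."""
    return read_cached(path, os.stat(path).st_mtime_ns)
```

A cache hit still costs one `stat` call, but it avoids reading the file's data from disk; `read_cached.cache_info()` reports hits and misses for tuning `maxsize`.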

Compression and Deduplication:

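Compression reduces storage footprint, and when the CPU can compress data faster than the disk can transfer it, it can even raise effective throughput; the cost is additional CPU time. Data deduplication identifies redundant data blocks across files or systems and stores each one only once. It can save substantial space, but tracking block fingerprints consumes CPU and memory, so measure its impact on performance before enabling it broadly.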
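Before enabling compression or deduplication, it can help to estimate the savings on a sample of your own data. The sketch below uses zlib as a stand-in for file-system compressors such as LZ4 or zstd (which are not in Python's standard library), and fixed-size 4 KiB chunks with SHA-256 fingerprints for deduplication. Production deduplication engines may use variable-size chunking, so treat the results as rough estimates.

```python
import hashlib
import zlib


def compression_ratio(data, level=6):
    """Original size / zlib-compressed size; values above 1.0 mean space saved."""
    if not data:
        return 1.0
    return len(data) / len(zlib.compress(data, level))


def dedup_ratio(data, block_size=4096):
    """Logical blocks / unique blocks, using fixed-size chunks and SHA-256
    fingerprints; 2.0 means half of the blocks are duplicates."""
    if not data:
        return 1.0
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    unique = {hashlib.sha256(block).digest() for block in blocks}
    return len(blocks) / len(unique)
```

Running both functions over a representative sample (for example, a few hundred megabytes read from the target data set) gives a first indication of whether either feature is worth its CPU and memory cost.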

Scalability Considerations:

As your storage needs grow, make sure your file system can scale with them. Distributed or clustered file systems let you expand capacity by adding storage nodes, though coordination and network overhead mean performance should be re-validated as the cluster grows.

Conclusion:

File system performance is a critical aspect of any IT infrastructure’s efficiency and productivity. By carefully considering hardware components, selecting appropriate file systems, fine-tuning configurations, and implementing maintenance practices, organizations can unlock the true potential of their storage systems. Regular monitoring, workload analysis, and ongoing optimization keep file system performance close to its peak, enabling businesses to handle data-intensive workloads reliably.

8 Frequently Asked Questions About File System Performance Optimization

- How can I improve my file system performance?

- What are the best practices for optimizing file system performance?

- What are the common causes of poor file system performance?

- What is the best way to monitor file system performance?

- What tools can I use to analyze and diagnose file system performance issues?

- How do I troubleshoot slow or degraded file system performance?

- Are there any tips or tricks to improve my file system’s speed and efficiency?

- Are there any settings that can be adjusted to enhance my file system’s overall performance?

How can I improve my file system performance?

Improving file system performance involves a combination of hardware upgrades, software optimizations, and maintenance practices. Here are some practical tips to help you enhance your file system performance:

- Upgrade Hardware Components: Consider upgrading your storage devices to faster and more efficient options such as solid-state drives (SSDs) or high-speed hard disk drives (HDDs). Additionally, increasing memory capacity and network bandwidth can improve data access speeds.

- Choose the Right File System: Select a file system that aligns with your workload requirements. Different file systems have varying strengths and weaknesses. Research and choose one that is optimized for your specific use case, whether it’s handling large files, small files, random access patterns, or sequential workloads.

- Optimize Disk Partitioning: Where possible, place data on separate physical disks so the load is spread across devices. Separating frequently accessed files from archival data reduces contention; keep in mind that partitions on the same physical disk still share its I/O capacity.

- Fine-Tune File System Parameters: Adjust various file system parameters to match your workload characteristics. Parameters such as block size, buffer cache size, read-ahead settings, and journaling options can be optimized to improve performance.

- Perform Regular Maintenance Tasks: Schedule routine maintenance tasks such as disk defragmentation (for HDDs only; SSDs do not benefit from it), file system checks (e.g., running fsck), and data deduplication to maintain optimal performance over time. Regularly monitor disk health and address potential bottlenecks promptly.

- Benchmark and Monitor Performance: Regularly benchmark your file system’s performance against a known baseline using specialized tools. Monitor key metrics like IOPS (Input/Output Operations Per Second), throughput, latency, and response times to identify areas for improvement.

- Analyze Workload Patterns: Understand the specific workload patterns your file system encounters to fine-tune its configuration accordingly. Analyze read-to-write ratios, file sizes, access patterns (random or sequential), and peak usage times to optimize performance.

- Implement Caching Strategies: Use caching at both the operating system and application levels. Make sure the OS page cache has enough free memory to work with, and add in-memory caching within applications to reduce disk accesses and improve overall performance.

- Leverage Compression and Deduplication: Use compression to reduce storage footprint while keeping performance at acceptable levels. Additionally, consider data deduplication technologies, which identify redundant data blocks across files or systems and store them once; weigh the space savings against the CPU and memory they consume.

- Consider Scalability: Plan for future growth by ensuring your file system is designed with scalability in mind. Distributed or clustered file systems allow you to expand by adding more storage nodes; validate performance as the cluster grows.

By following these tips and continuously monitoring and optimizing your file system, you can enhance its performance, leading to improved efficiency, productivity, and a better overall user experience.

What are the best practices for optimizing file system performance?

Optimizing file system performance involves implementing a combination of hardware, software, and maintenance practices. Here are some best practices to consider:

- Choose the Right File System: Select a file system that aligns with your specific workload requirements. Different file systems have varying strengths and weaknesses, so understanding your workload patterns (e.g., large files, small files, random access, sequential access) will help you make an informed decision.

- Invest in High-Performance Hardware: Ensure your hardware infrastructure meets the performance demands of your file system. Consider factors such as disk type (HDD or SSD), disk speed, RAID configurations, network bandwidth, and memory capacity. Investing in faster disks or solid-state drives (SSDs) can significantly enhance data access speeds.

- Proper Disk Partitioning: Distribute data across multiple physical disks to balance the load and reduce contention. Consider separating frequently accessed files from archival or less frequently accessed data to optimize performance.

- File System Tuning: Fine-tune various parameters within the file system to match workload characteristics and optimize performance. Adjust block size, buffer cache size, read-ahead settings, journaling options, and other relevant parameters based on your specific requirements.

- Regular Maintenance Tasks: Perform routine maintenance tasks to keep the file system in optimal condition. This includes disk defragmentation (for HDDs), regular file system checks (e.g., with fsck), and data deduplication to minimize storage usage.

- Benchmarking and Monitoring: Regularly benchmark your file system’s performance against a known baseline using specialized tools. Monitor key metrics such as IOPS (Input/Output Operations Per Second), throughput, latency, and response times to identify areas for improvement.

- Analyze Workload Patterns: Understand the specific workload patterns that your file system encounters to fine-tune its configuration accordingly. Analyze read-to-write ratios, file sizes, access patterns (random or sequential), and peak usage times to optimize performance.

- Implement Caching Strategies: Use caching mechanisms at both the operating system and application levels to reduce disk accesses. Ensure the OS page cache has sufficient memory, and consider memory caching within applications to keep frequently accessed data in memory for faster retrieval.

- Compression and Deduplication: Leverage compression to reduce storage footprint, keeping an eye on its CPU cost. Implement data deduplication to identify and eliminate redundant data blocks, optimizing storage usage, but test its CPU and memory overhead first.

- Scalability Planning: Design your file system with scalability in mind. Consider distributed or clustered file systems that allow seamless expansion by adding more storage nodes as your needs grow over time.

By following these best practices, you can optimize file system performance, enhance data access speeds, improve overall efficiency, and ensure smooth operations within your IT infrastructure.

What are the common causes of poor file system performance?

Poor file system performance can be attributed to several common causes. Understanding these causes can help identify and address performance issues effectively. Here are some of the most common factors that contribute to poor file system performance:

- Insufficient Hardware Resources: Slow or overloaded disks, limited memory, or insufficient network bandwidth can significantly degrade file system performance, resulting in slower data access, increased latency, and reduced throughput.

- Misaligned File System and Workload: Choosing an inappropriate file system for a particular workload can lead to poor performance. Different file systems have varying strengths and weaknesses, and using the wrong one for a specific workload can result in suboptimal performance.

- Fragmented File System: Over time, file systems can become fragmented as files are repeatedly created, extended, and deleted. Fragmentation scatters a file’s data blocks across the storage medium. On hard disk drives this slows access because the disk heads must move more often; solid-state drives have no moving heads and are far less affected.

- Suboptimal File System Configuration: Incorrect configuration settings within the file system can negatively impact performance. Parameters such as block size, buffer cache size, read-ahead settings, journaling options, or caching policies should be appropriately configured based on workload characteristics.

- Lack of Regular Maintenance: Neglecting routine maintenance tasks like defragmentation (on HDDs), file system checks (e.g., fsck), or data deduplication can gradually degrade file system performance over time. These maintenance activities help optimize storage utilization and prevent bottlenecks.

- Network Congestion: File systems accessed over a network (e.g., Network Attached Storage) may experience poor performance if there is excessive network congestion or insufficient bandwidth available for data transfer.

- Security Measures: While security measures like encryption or access control lists are essential for protecting data integrity and privacy, they add processing overhead that can reduce overall file system performance.

- Inefficient File Access Patterns: Workloads that exhibit inefficient file access patterns, such as excessive random access or frequent small file operations, can lead to poor file system performance. File systems are designed to handle specific access patterns efficiently, and deviating from those patterns can result in decreased performance.

- Outdated Software or Drivers: Using outdated or incompatible software versions, drivers, or firmware can lead to compatibility issues and hinder optimal file system performance. It is crucial to keep the software stack up to date with the latest patches and updates.

- Hardware Failures: Faulty hardware components, such as failing disks or network devices, can cause severe performance degradation in a file system. Regular monitoring of hardware health is essential to identify and replace faulty components promptly.

Identifying the specific cause(s) of poor file system performance requires a thorough analysis of the infrastructure, workload patterns, and configuration settings. By addressing these common causes proactively and implementing appropriate optimizations, organizations can improve their file system performance and ensure smooth data operations.

What is the best way to monitor file system performance?

Monitoring file system performance is essential to ensure optimal operation and identify potential bottlenecks or issues. Here are some effective methods for monitoring file system performance:

- Operating System Tools: Most operating systems provide built-in tools to monitor file system performance. For example, in Windows, you can use Performance Monitor (PerfMon) or Resource Monitor to track disk I/O, read/write speeds, and other relevant metrics. In Linux-based systems, tools like iostat, vmstat, or sar can provide detailed information on disk activity and performance.

- File System-specific Tools: Some file systems have their own monitoring tools that offer deeper insights into their performance characteristics. For instance, ZFS has the zpool iostat command to monitor I/O activity and other metrics specific to the ZFS file system.

- Third-Party Monitoring Software: Numerous third-party software solutions specialize in monitoring file system performance. These tools often offer more comprehensive features, customizable dashboards, and real-time alerts. Examples include SolarWinds Storage Resource Monitor, Nagios Core with plugins for disk monitoring, or Datadog’s infrastructure monitoring capabilities.

- Performance Counters: Many file systems expose performance counters that can be accessed programmatically or through dedicated monitoring tools. These counters provide detailed information about various aspects of file system performance such as read/write operations per second (IOPS), throughput, latency, cache hit ratios, and more.

- Disk Monitoring Tools: In addition to file system-specific monitoring tools, it’s crucial to monitor the health and performance of the physical disks or storage arrays underlying the file system. Disk health indicators like SMART (Self-Monitoring, Analysis, and Reporting Technology) attributes can be monitored with utilities such as smartmontools (smartctl), which is available for both Linux and Windows, or with other third-party software.

- Log Analysis: Analyzing log files generated by the operating system or specific applications can help identify any errors or warnings related to file system operations. Tools like ELK Stack (Elasticsearch, Logstash, and Kibana) or Splunk can assist in aggregating and visualizing log data for efficient analysis.

- Real-Time Alerts: Setting up real-time alerts based on predefined thresholds or anomalies can help proactively identify performance issues. This ensures timely intervention before they impact critical operations. Many monitoring tools mentioned above offer alerting capabilities.

Remember that the choice of monitoring method depends on the specific file system, operating system, infrastructure setup, and organizational requirements. It’s advisable to combine multiple monitoring approaches to gain a comprehensive view of file system performance and promptly address any potential issues.
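Threshold-based alerting can be sketched in a few lines. The example below, assuming a Unix-like host for the inode check, flags a file system whose space or inode usage crosses a limit. The 90% defaults and the function name are hypothetical, and in practice the monitoring tools named above would evaluate and route such alerts.

```python
import os
import shutil


def capacity_alerts(path, max_space_pct=90.0, max_inode_pct=90.0):
    """Return warning strings when space or inode usage of the file system
    holding `path` exceeds the given percentages."""
    alerts = []
    usage = shutil.disk_usage(path)
    if usage.total:  # guard against pseudo file systems that report zero size
        space_pct = 100.0 * usage.used / usage.total
        if space_pct > max_space_pct:
            alerts.append(f"{path}: {space_pct:.1f}% of space used")
    if hasattr(os, "statvfs"):  # inode counts are exposed on Unix-like systems only
        st = os.statvfs(path)
        if st.f_files:  # some file systems report 0 because they allocate inodes dynamically
            inode_pct = 100.0 * (st.f_files - st.f_ffree) / st.f_files
            if inode_pct > max_inode_pct:
                alerts.append(f"{path}: {inode_pct:.1f}% of inodes used")
    return alerts
```

Run it periodically (for example from cron) against each mount point you care about and forward any returned strings to your alerting channel.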

What tools can I use to analyze and diagnose file system performance issues?

When it comes to analyzing and diagnosing file system performance issues, there are several tools available that can provide valuable insights into the underlying causes. Here are some commonly used tools:

- iostat: A command-line tool available on Unix-like systems that provides statistics on input/output (I/O) operations, including disk utilization, throughput, and latency. It helps identify potential bottlenecks and monitor disk performance.

- sar: Another command-line tool found in Unix-like systems, sar collects and reports system activity data, including CPU usage, memory usage, network activity, and disk I/O. It can be used to analyze historical performance trends and identify patterns related to file system performance.

- perf: A powerful profiling tool available on Linux systems that offers in-depth analysis of system performance. perf provides detailed information about CPU utilization, cache misses, I/O events, context switches, and more. It can help pinpoint performance bottlenecks related to file system operations.

- dstat: A versatile command-line tool that combines various system monitoring features into a single interface. dstat displays real-time statistics on CPU usage, memory consumption, disk activity, network traffic, and more. It is particularly useful for identifying correlations between different metrics affecting file system performance.

- Windows Performance Monitor: A built-in tool in Windows operating systems that allows you to monitor various aspects of system performance. With Performance Monitor (perfmon), you can track disk I/O metrics like read/write rates, response times, queue lengths, and other relevant counters specific to file systems.

- fsstat: A utility on Solaris and illumos-based systems that reports file system activity by file system type or mount point. fsstat shows counts of metadata operations such as file creations, removals, renames, lookups, attribute reads and updates, and directory reads, along with read/write operation counts and bytes transferred.

- Wireshark: A network protocol analyzer that captures packet-level data. While not directly focused on file system performance, Wireshark can help identify network-related issues that may impact file system operations, such as high latency, packet loss, or misconfigurations.

Remember, the choice of tool depends on your operating system and specific requirements. It’s recommended to explore the documentation and capabilities of each tool to determine which one best suits your needs for analyzing and diagnosing file system performance issues effectively.
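Tools like iostat derive their numbers from kernel counters. On Linux these live in `/proc/diskstats`, and the sketch below computes iostat-style rates from two snapshots taken an interval apart. It assumes the field layout described in the kernel's I/O statistics documentation, and its single combined `await_ms` figure is a simplification of what recent iostat versions report separately for reads and writes.

```python
from dataclasses import dataclass

SECTOR_BYTES = 512  # /proc/diskstats reports sectors in 512-byte units


@dataclass
class DiskSample:
    reads: int
    sectors_read: int
    ms_reading: int
    writes: int
    sectors_written: int
    ms_writing: int
    ms_doing_io: int


def parse_diskstats(text):
    """Map device name -> DiskSample from the contents of /proc/diskstats."""
    samples = {}
    for line in text.splitlines():
        f = line.split()
        if len(f) < 14:
            continue  # skip blank or unexpected lines
        samples[f[2]] = DiskSample(
            reads=int(f[3]), sectors_read=int(f[5]), ms_reading=int(f[6]),
            writes=int(f[7]), sectors_written=int(f[9]), ms_writing=int(f[10]),
            ms_doing_io=int(f[12]),
        )
    return samples


def iostat_like(before, after, interval_s):
    """Rates between two DiskSample snapshots taken interval_s seconds apart."""
    reads = after.reads - before.reads
    writes = after.writes - before.writes
    ios = reads + writes
    io_ms = (after.ms_reading - before.ms_reading) + (after.ms_writing - before.ms_writing)
    return {
        "r/s": reads / interval_s,
        "w/s": writes / interval_s,
        "rMB/s": (after.sectors_read - before.sectors_read) * SECTOR_BYTES / interval_s / 1e6,
        "wMB/s": (after.sectors_written - before.sectors_written) * SECTOR_BYTES / interval_s / 1e6,
        "await_ms": io_ms / ios if ios else 0.0,  # average time per completed request
        "util_pct": 100.0 * (after.ms_doing_io - before.ms_doing_io) / (interval_s * 1000),
    }
```

To use it, read `/proc/diskstats` twice with `parse_diskstats`, sleeping between the reads, and pass the two samples for one device to `iostat_like`. For devices that serve requests in parallel, such as SSDs, utilization near 100% does not necessarily mean the device is saturated.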

How do I troubleshoot slow or degraded file system performance?

When experiencing slow or degraded file system performance, it’s important to troubleshoot the issue promptly to minimize any impact on productivity. Here are some steps you can take to identify and address the problem:

- Identify the Scope: Determine if the slow performance is affecting a specific file system, a particular application, or the entire system. This will help narrow down the potential causes.

- Monitor System Resources: Check resource utilization levels such as CPU usage, memory usage, and disk I/O. High resource consumption can lead to performance degradation. Use monitoring tools to identify any bottlenecks.

- Check Hardware Health: Ensure that all hardware components (disks, controllers, network interfaces) are functioning properly. Monitor disk health using SMART (Self-Monitoring, Analysis, and Reporting Technology) tools and look for any signs of failure or degradation.

- Analyze Workload Patterns: Examine the workload patterns on your file system. Identify if there are any specific files or directories causing slowdowns or if certain applications are generating excessive I/O operations. This analysis will help pinpoint potential areas of improvement.

- Review File System Configuration: Verify that your file system is configured optimally for your workload requirements. Check parameters such as block size, read-ahead settings, caching options, and journaling modes to ensure they align with your workload patterns.

- Evaluate Disk Partitioning: Assess how data is distributed across disks or partitions. If one disk is heavily loaded while others remain underutilized, consider redistributing data to balance the load more evenly.

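- Check for Fragmentation: On hard disk drives, fragmentation can significantly degrade performance over time. Use defragmentation tools to consolidate fragmented files and optimize data placement. Traditional defragmentation offers little benefit on solid-state drives and only adds unnecessary writes.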

- Review Network Connectivity: If your file system relies on network-attached storage (NAS), check for network connectivity issues such as high latency or packet loss that may be affecting performance.

- Update Software and Drivers: Ensure that your file system software, drivers, and firmware are up to date. Manufacturers often release updates that address performance issues or provide optimizations.

- Consider File System Tuning: Research and implement recommended tuning options specific to your file system. Adjusting parameters like buffer cache size, disk scheduler settings, or read-ahead values can have a positive impact on performance.

- Analyze Third-Party Applications: If you have any third-party applications or services interacting with your file system, review their configurations and logs for any potential conflicts or performance issues.

- Seek Expert Assistance: If the issue persists or if you’re unsure about the root cause, consider consulting with IT professionals or contacting technical support from your file system vendor for further assistance.

By following these troubleshooting steps, you can identify and resolve the underlying causes of slow or degraded file system performance, ultimately improving the efficiency and responsiveness of your storage infrastructure.
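To find which applications are generating I/O on Linux, per-process counters are available in `/proc/<pid>/io`. This sketch ranks processes by `write_bytes`; the counters are cumulative since each process started, so compare two snapshots to identify the current heavy writers. Reading another user's counters typically requires root, and unreadable processes are simply skipped. The function names are hypothetical.

```python
import os


def parse_proc_io(text):
    """Parse the 'key: value' lines of /proc/<pid>/io into a dict of ints."""
    stats = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            stats[key.strip()] = int(value)
    return stats


def top_writers(limit=5):
    """Return (write_bytes, pid, command) tuples for the heaviest writers."""
    ranking = []
    for pid in filter(str.isdigit, os.listdir("/proc")):
        try:
            with open(f"/proc/{pid}/io") as fh:
                stats = parse_proc_io(fh.read())
            with open(f"/proc/{pid}/comm") as fh:
                command = fh.read().strip()
        except OSError:
            continue  # process exited, or we lack permission to read its counters
        # write_bytes counts bytes this process caused to be sent to the storage layer
        ranking.append((stats.get("write_bytes", 0), pid, command))
    return sorted(ranking, reverse=True)[:limit]
```

Tools such as iotop present the same kind of per-process information interactively.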

Are there any tips or tricks to improve my file system’s speed and efficiency?

Certainly! Here are some tips and tricks to improve your file system’s speed and efficiency:

- Choose the Right File System: Select a file system that aligns with your specific workload requirements. Different file systems have varying strengths and weaknesses, so consider factors such as file size, access patterns (random or sequential), and the nature of your data to optimize performance.

- Optimize Disk Partitioning: Distribute data across multiple physical disks or partitions effectively. By spreading the load evenly or partitioning based on usage patterns, you can reduce contention and enhance overall performance.

- Fine-Tune File System Parameters: Adjust various parameters within the file system to match your workload characteristics. Consider block size, buffer cache size, read-ahead settings, and journaling options to optimize performance.

- Utilize Caching Strategies: Implement effective caching strategies at both the operating system and application level. Caching frequently accessed data in memory reduces disk access times and improves overall performance.

- Regular Maintenance: Perform routine maintenance tasks such as disk defragmentation (for HDDs), file system checks, and data deduplication to maintain peak performance. Regularly monitor disk health, identify potential bottlenecks, and address them promptly.

- Benchmark and Monitor Performance: Regularly benchmark your file system’s performance against a known baseline using specialized tools. Monitor key metrics like IOPS (Input/Output Operations Per Second), throughput, latency, and response times to identify areas for improvement.

- Analyze Workload Patterns: Understand the specific workload patterns your file system will encounter to fine-tune configuration settings accordingly. Analyze read-to-write ratios, file sizes, access patterns (random or sequential), and peak usage times for optimization opportunities.

- Implement Compression and Deduplication: Leverage compression techniques to reduce storage footprint, balancing the savings against CPU cost. Additionally, consider data deduplication technologies that identify redundant data blocks across files or systems for further storage optimization.

- Ensure Scalability: Design your file system with scalability in mind to accommodate future storage needs. Distributed or clustered file systems allow expansion by adding more storage nodes; verify that performance holds as the cluster grows.

- Stay Up-to-Date: Keep your file system software and hardware drivers up-to-date with the latest patches and updates. This ensures compatibility, bug fixes, and performance enhancements.

By applying these tips and tricks, you can enhance the speed and efficiency of your file system, resulting in improved overall performance and productivity for your IT infrastructure.

Are there any settings that can be adjusted to enhance my file system’s overall performance?

Yes, there are several settings that can be adjusted to enhance your file system’s overall performance. Here are some key settings to consider:

- Block Size: The block size is the unit in which the file system allocates space and transfers data. It is usually fixed when the file system is created (for example, with the -b option of mkfs.ext4). Larger blocks suit large files accessed sequentially, while smaller blocks waste less space on small files and can reduce the amount of data read for small random I/O.

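- Buffer Cache Size: The buffer (or page) cache is an area of memory used to hold recently and frequently accessed data. A larger cache lets more data be served from memory, reducing disk I/O operations and improving overall performance. On some systems this is an explicit tunable; on Linux, the page cache automatically uses free memory, so the practical levers are adding RAM and tuning writeback settings such as vm.dirty_ratio.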

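- Read-Ahead Settings: Read-ahead is a technique where the file system anticipates future read requests and preloads data into memory. Larger read-ahead values help sequential workloads, while for random workloads they can waste I/O on data that is never used. On Linux, the per-device value can be viewed in /sys/block/<device>/queue/read_ahead_kb or changed with blockdev --setra.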

- Journaling Options: Journaling is a mechanism used by many file systems to ensure data consistency in case of system crashes or power failures. Different journaling modes carry different overhead. For example, ext4 supports data=journal, data=ordered (the default), and data=writeback; the weaker modes are faster but give fewer guarantees about file contents after a crash. Choose the mode that balances performance against your consistency needs.

- File System Compression: Enabling file system compression reduces storage space requirements and can improve performance by reducing the amount of data read from and written to disk. However, it’s important to evaluate the trade-off between compression efficiency and CPU utilization.

- Disk Scheduler: The disk (I/O) scheduler determines how read/write requests are ordered and dispatched to the device. Different schedulers use different algorithms, which perform differently under different workloads. On current Linux kernels the options typically include mq-deadline, bfq, kyber, and none (often used for fast NVMe SSDs); experimenting with them may help optimize disk I/O operations.

- File System Check Interval: Regular file system checks (fsck) verify data integrity but typically run on an unmounted file system, usually at boot, so frequent checks lengthen boot or maintenance windows. On ext2, ext3, and ext4, the mount-count and time-based check intervals can be adjusted with tune2fs (the -c and -i options) to suit your requirements.

It’s important to note that adjusting these settings should be done with caution, as improper configurations can lead to unintended consequences. Make sure you understand the implications of each setting and test changes thoroughly before applying them in a production environment.
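On Linux, the active I/O scheduler and the read-ahead size for a block device can be read from sysfs. This sketch parses those two files; the device name is an assumption (use a name shown by lsblk), and the sysfs root is a parameter so the function can be tested against a fake directory. Changing either value requires root, for example by writing a scheduler name to /sys/block/<device>/queue/scheduler.

```python
from pathlib import Path


def active_scheduler(contents):
    """The kernel brackets the active scheduler,
    e.g. 'mq-deadline kyber [bfq] none' -> 'bfq'."""
    for token in contents.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    return contents.strip()  # assumption: no brackets means a single listed option


def queue_settings(device, sysfs=Path("/sys/block")):
    """Read the active scheduler and read-ahead size (KiB) for a block device."""
    queue = sysfs / device / "queue"
    return {
        "scheduler": active_scheduler((queue / "scheduler").read_text()),
        "read_ahead_kb": int((queue / "read_ahead_kb").read_text()),
    }
```

Recording these values before and after a change, alongside benchmark results, makes it easier to tell whether a tuning experiment actually helped.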